Lemurian Labs, a Toronto, Canada-based AI computing solutions provider, recently raised $9M in funding. In conjunction with the announcement, CEO Jay Dawani joined us to answer our questions about the company, its solutions, the funding, and future plans.

FinSMEs: Hi Jay, can you tell us a bit more about yourself? What’s your background?

I majored in applied mathematics at university but have spent the majority of my career in artificial intelligence and robotics. I’ve always been attracted to big, hard problems, and that mostly led me to startups. Before co-founding Lemurian Labs, I served in a number of leadership roles at companies including BlocPlay and Geometric Energy Corporation. I’ve been fortunate to have had the opportunity to spearhead projects covering a wide spectrum of technologies, including quantum computing, metaverse, gaming, blockchain, AI, various modalities of robotics, and space. Exposure to this breadth of technologies, and to the experts in them, has allowed me to become a polymath, explore ideas at the intersections of these fields, and ask, “If this were solved, what might it enable us to do that we cannot do today?” Additionally, I’ve had the privilege of serving as an advisor to organizations like NASA Frontier Development Lab and SiaClassic, as well as collaborating with leading AI firms. Beyond my professional endeavors, I’m also the author of the book “Mathematics for Deep Learning,” which I wrote to make AI more accessible and less intimidating to newcomers.

FinSMEs: Let’s speak about Lemurian Labs. What is the market problem you want to solve? What is the real opportunity?

At Lemurian Labs, we believe in the transformative benefit that AI can have on our lives. In fact, we started as an AI company, but when we started thinking about how this technology would get deployed and how consumers would interact with it, we had a moment of realization. Models would continue to get bigger and therefore more computationally intensive, but the computing platforms we rely on were not progressing at the necessary rate. Additionally, the software stacks the industry relies on are extremely brittle. This becomes very problematic as more companies enter the arena to build ever more capable AI models and start deploying them at scale. A lot of these applications will run in data centers, and these infrastructures are power-bound, which means more data centers will need to be built. Without a first-principles rethink of computer architectures and the software infrastructure, it is fairly easy to see how data center energy consumption could grow tenfold from where it is today. The rapid growth of AI models is already stressing our current hardware infrastructure to the point of fracture, resulting in exorbitant costs, excessive power consumption, and environmental concerns. If we keep on this path, we’re looking at a future where only a select few companies have the ability to train state-of-the-art AI models, creating a potentially dangerous “AI divide.” We believe everyone should have access to AI, not a privileged few, and achieving this requires thinking about the problem differently.

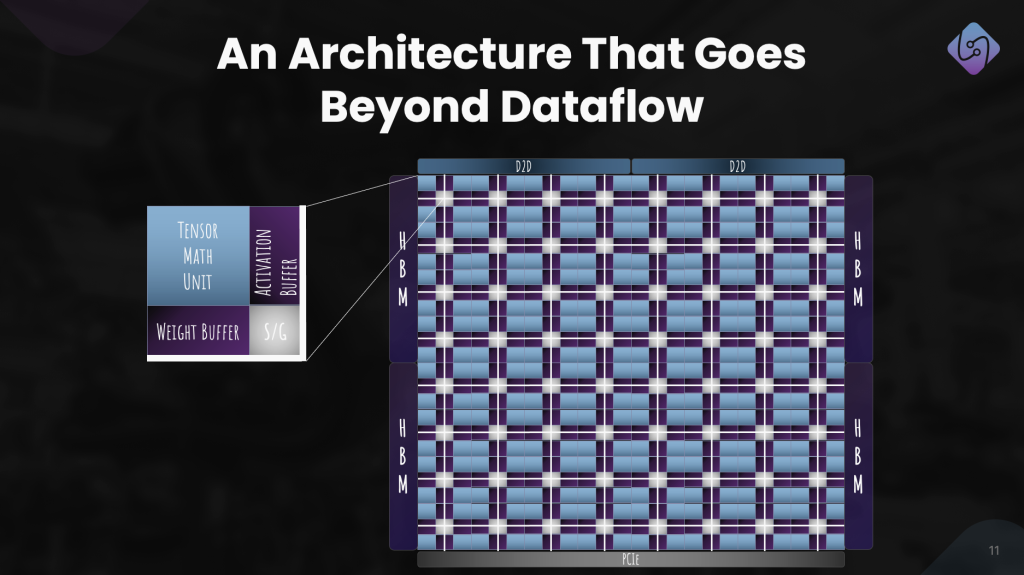

To us, the real opportunity lies in fundamentally transforming the economics of AI. Even the largest companies in the world are struggling to obtain and afford the compute power they need. With our focus on efficiency, utilization, and scalability, we are developing an accelerated computing platform that is not only more performant within the same energy budget, but also makes it easier for developers to extract more performance from the hardware. To aid in this effort, we developed a new way of representing numbers and doing arithmetic. It is called PAL (parallel adaptive logarithm), and it enabled us to design a processor capable of achieving up to 20 times greater throughput than traditional GPUs on benchmark AI workloads. With this, we aim to expedite workloads while broadening access and fostering innovation, adoption, and equity.
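The interview does not describe how PAL works internally. As background only, the general idea behind logarithmic number formats can be sketched as follows: if a value is stored as a sign plus the base-2 logarithm of its magnitude, multiplication reduces to adding those logarithms. The Python snippet below is a textbook-style illustration under that assumption. The function names (`to_log`, `from_log`, `log_mul`) are hypothetical, and nothing here should be read as the PAL format or Lemurian Labs’ design.

```python
import math
from typing import Tuple

# A value is represented as (sign, log2 of its magnitude).
# This is a generic logarithmic encoding, NOT the PAL format.
LogValue = Tuple[int, float]


def to_log(x: float) -> LogValue:
    """Encode a nonzero real number as (sign, log2|x|)."""
    if x == 0:
        # log2(0) is undefined; real formats reserve a special code for zero.
        raise ValueError("zero needs a dedicated encoding in a log format")
    return (1 if x > 0 else -1, math.log2(abs(x)))


def from_log(v: LogValue) -> float:
    """Decode (sign, exponent) back to an ordinary float."""
    sign, exponent = v
    return sign * 2.0 ** exponent


def log_mul(a: LogValue, b: LogValue) -> LogValue:
    """Multiply two encoded values: signs multiply, exponents add."""
    return (a[0] * b[0], a[1] + b[1])


if __name__ == "__main__":
    product = log_mul(to_log(8.0), to_log(-0.5))
    print(from_log(product))
```

In a representation like this, multiplication becomes a single addition, while addition of two encoded values is the harder operation, which is one reason such formats require careful design.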

FinSMEs: What are the features differentiating the product from competitors?

The biggest differentiator is our focus on software. We came at this from the ML engineer’s perspective, thinking about the workloads that exist today, how they may evolve over time, and how they will be deployed. We then went progressively lower through the stack until we reached hardware, to fully understand how all the different pieces fit together and how the industry ended up here. Once we had a holistic view, we asked ourselves what better might look like. It is with this understanding that we started thinking about our accelerator, and in fact, we started working on our software stack before we even knew the kind of computer architecture we would pursue. We don’t believe in technology for technology’s sake; we’re a very product-focused company, and that means focused efforts that solve real customer problems.

It is this kind of thinking that led us to a computing platform capable of delivering up to 20 times the AI workload throughput of legacy GPUs, which moves the needle not only on energy efficiency but also on cost-effectiveness. A lot of folks talk about responsible and safe AI deployment, but that also includes power consumption and carbon emissions, which the industry has not spoken about as much.

So in essence, we’re not just improving upon existing technology, because past a certain point, staying within a given paradigm yields diminishing returns; it’s like a rubber band that can only be stretched so far. The path forward requires reorienting accelerated computing around energy efficiency, utilization, and developer productivity.

FinSMEs: You just raised a new funding round. Please, tell us more about it.

We did in fact recently secure $9 million in seed funding, led by Oval Park Capital, with significant participation from Good Growth Capital, Raptor Group, Streamlined Ventures, and Alumni Ventures, among others. This funding underlines the potential of our technology and its transformative impact on the AI industry. We’re grateful for our investors’ confidence in our mission and in our team, which brings expertise in various aspects of the problem from prior experience at NVIDIA, AMD, Google, Microsoft, and others. This funding will be instrumental in proving our core technology as we move towards expanding AI’s benefits to a broader audience.

Our focus is on pushing the boundaries of processing power, and this round of funding will be pivotal in helping us achieve our goals. We’re excited about the journey ahead and the impact we can make on the AI landscape.

FinSMEs: Can you share some numbers and achievements of the business?

Our key achievements and figures include:

- Seed Funding: As previously mentioned, we recently secured $9 million in seed funding. This investment demonstrates strong investor support and underscores the significant interest and confidence in our vision for democratizing AI and redefining the economics of AI development.

- Performance Gain: One of our most notable achievements is the substantial performance gain we’ve achieved with our accelerated computing platform, up to 20 times greater throughput for AI workloads compared to legacy GPUs.

- Cost Reduction: We’ve managed to achieve these remarkable performance gains while reducing the cost to just 1/10th of the total cost of traditional hardware. This cost-effectiveness is a game-changer, making AI development accessible to a broader range of companies and individuals.

- Environmental Sustainability: Our approach significantly reduces power consumption, contributing to a more eco-friendly approach to AI development. In a world increasingly concerned about environmental impact, this achievement is particularly noteworthy.

- Team Expertise: We’re proud of our team’s expertise, which includes professionals from industry giants like Google, Microsoft, NVIDIA, AMD, and Intel. Their experience and commitment are integral to the success and innovation at Lemurian Labs.

- Mission Impact: Our mission is to democratize AI and make AI development affordable and accessible to a wider audience. By achieving these remarkable performance gains at a lower cost, we’re advancing this mission, redefining the boundaries of processing power, and enabling precise and energy-efficient AI solutions for nearly any use case.

FinSMEs: What are your medium-term plans?

Our first goal is to create a software stack that can target CPUs, GPUs, and AI accelerators such as ours, which we will be making available next year to some of our early partners. We also have a roadmap for it that extends well beyond that, which includes open-sourcing it.

We have started to engage with a spectrum of businesses and organizations building and deploying AI solutions, offering them access to the capabilities of our technology. This effort will involve strategic collaborations, partnerships, and outreach initiatives designed to ensure our solution is accessible to a more extensive audience.

We are improving the architecture of our accelerator, and we will be taping it out in early 2025 and making it available to early customers later that year. In the meantime, we are in conversations with companies interested in licensing our number format and our architecture to aid their own chip design efforts for both training and inference, in the data center and at the edge.

All this is in line with our mission to democratize AI and help change the landscape so that this advanced technology is available to a wider and more diverse range of users.

FinSMEs

13/11/2023